It's always depressing to see fanbois bleating and moaning about their beloved technology or piece of bling not being universally liked. This is normally put down, in a wonderfully immature way, to the failure of "the other side" to see their point of view rather than to any innate failings of their beloved approach.

"SOAP is not Dead - It's Undead" is a classic of the genre.

Why hasn't REST succeeded in the enterprise? Not, of course, because it isn't actually any better than SOAP for enterprise scenarios and is indeed much, much worse in many. Nope.

But first, here is the reason why REST is successful:

His presentation showed that 73% of the APIs on Programmable Web use REST. SOAP is far behind but is still represented in 17% of the APIs.

This is like going to France, doing a language survey and declaring that French is the most popular language in the world. So what would doing the same query on the likes of Oracle, SAP, IBM or Microsoft's enterprise technology stacks deliver? I assumed the number to beat would be huge and got ready for some serious searching... but the number to beat is 2368.

Errr, seriously? I've worked in single enterprises that had more SOAP endpoints than that. Then add the libraries of WSDLs from SAP and Oracle, and the behemoth that is Oracle AIA, which has so many that Oracle don't boast about it as it might make it look complicated. Back in 2005, folks at Oracle boasted about having over 3000 web services across their applications. Now, before people bleat about this being proof of SOAP's complexity: you're a hypocrite if on one hand you use the ProgrammableWeb stats as "proof" of REST's success but on the other you try to use the massive volume of WSDLs out there as proof of SOAP's "complexity".

And I'm not even getting into the global standards that use SOAP every day for B2B, from people like SWIFT and Open Airlines... shall I go on and on? 2400 APIs is a success? And SOAP isn't anywhere near that? Like I said, it's like going to France and claiming French is the most spoken language on the planet.

All this just proves what I've said for a long time: REST works for information traversal, but it's not set up for the enterprise. So what, according to its advocates, is stopping REST from displacing SOAP in the enterprise?

"All the tools, hires, licenses & codebase has been built around SOAP for a decade," Loveless wrote on Twitter. "Hard to turn on a dime."

Wow, the barefacedness of this statement is hard to beat.

REST has been kicking at this door for over half of that time, and some folks argue that it in fact predates SOAP. So it really is bullshit to claim that it's all the fault of tools and codebase. SOAP replaced the old EAI approaches in new enterprise projects within a couple of years. We went from a situation in about 1998 where everyone was doing proprietary EAI integration, with occasional CORBA for RPC, to one in 2002 where everyone was doing Web Services with some JMS. People in 2005 were telling me that REST was the future and that REST would win, and now, SIX YEARS LATER, people are bleating about 10 years of SOAP adoption...

If an approach is better for integration in the enterprise, it will be adopted. REST isn't better, yet, for enterprise integration because it fundamentally remains a developer approach, not a professional enterprise approach. SOAP isn't complex. Technically it might suck (hell, my father said "great, we've now got enough processing cycles to burn that ASCII-RPC has finally made it"), but conceptually it's simple, and when managing complex estates with lots of different people, that conceptual simplicity is what counts.

Michael Cote of RedMonk hits the nail partially on the head when he says:

"As enterprise development teams start including cloud technologies in their applications, incompatible cloud platforms and APIs will be a huge road block," said Michael Cote, analyst at RedMonk. "We're already seeing a clamoring for tools and services that integrate this spaghetti bowl of end-points, and they're only going to become more important to realizing the benefits of cloud development."

In other words, the lack of a formal contract and standard interface mechanism remains the real reason why REST isn't being adopted in the enterprise.

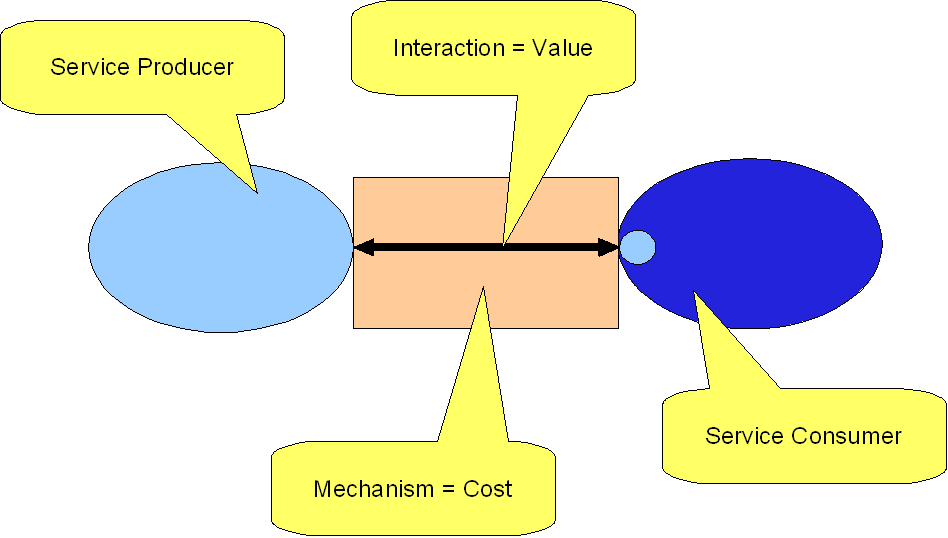

What SOAP did was solve a problem that the enterprise had.

How do I describe integration interfaces so that my systems on different technology stacks can communicate, and do so in a way that lets my teams work independently of each other? REST does not solve this problem effectively, and bleating about "dynamic interfaces" being "better" misses the whole point of what has made B2B and Machine 2 Machine integration successful down the years, namely a focus on people-centric approaches rather than technology-centric ones.

Unfortunately, with REST there appears to be an active movement to stop this professionalism creeping in, and to block the new standards that would actually make REST better for the enterprise.

REST needs a standard way to publish its API and a way to notify changes to that API. This is a "solved" problem in IT, but for some reason the REST community appears to prefer blaming others for their technology's lack of enterprise success rather than facing up to the simple reality:

SOAP got some things right, and the biggest thing it got right was a shareable and toolable contract (WSDL), which enabled interfaces to be published in a standard way that included both functional and data standards.

SOAP isn't undead; it's very much alive in the enterprise, and is indeed the only really viable approach when integrating package solutions from a number of vendors (a massive piece of enterprise IT). REST, however, barely registers: fewer than 2500 APIs after all these years of development? Pathetic.

REST for the enterprise isn't undead... it's been stillborn for over five years.
