At dinner the other night, a friend and I were talking about what we wanted the next iPhone to do. We agreed that a new camera would be good, but neither of us saw any point in a forward-facing camera, as we don't know anyone who has made more than two video calls despite having phones that can do it.

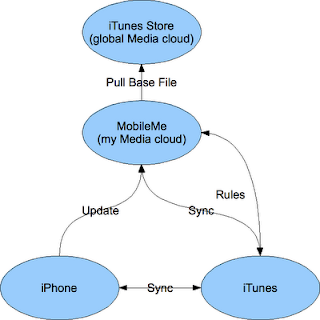

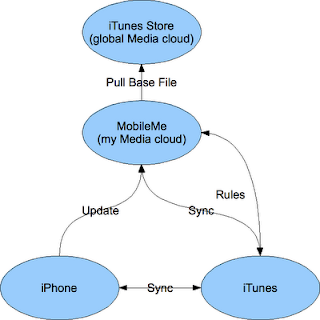

So what did we think would be really good for the 4th-generation iPhone? In particular, what could Apple do to reinforce their strengths over the telcos and use them to leverage people onto the Mac? The answer, we decided, lies in how Apple look to exploit iTunes, MobileMe and the store. Increasing storage is of course something that will happen, but whether it's 32GB, 64GB or even 128GB, you still won't have enough storage for every video you might want to watch, and it's video that really takes up the space. Podcasts come a close second with their huge files, and the challenge, of course, is that you can only sync when connected to your base machine. The addition of buying over WiFi, and now 3G, potentially heralds a new direction, however: one in which Apple can use the cloud in a positive way, and in a way that sets up an additional contractual revenue stream for Apple outside the telcos' control.

The answer is MobileMe. Currently it is (IMO) a pretty basic service with minimal value; sure, you can sync contacts and the like, but people who use an iPhone at work already get that sort of service via Exchange.

The other piece is Apple's other "cloud" solution... iTunes. Millions of connections, billions of downloads: it's a cloud of music, video and application content, like a pre-populated S3. In addition, Apple know what you have bought from them; they don't currently recognise what you haven't bought from them, but the point here is to increase the Apple lock-in.

So how could they combine a cloud solution such as MobileMe with the iPhone/iPod Touch and iTunes? Well, how about a very simple concept... infinite storage. Your iPhone no longer just has 32GB; it has a set of rules that say what you want locally, e.g. the last 5 unlistened podcasts, the 5 oldest unwatched episodes, etc. These rules get applied on your machine and fill up the 32GB iPhone. You then go out and start watching videos and listening to podcasts.

MobileMe then kicks in and, in the background (it's Apple, so they can do this; you can't, they can), starts downloading the latest episodes and locally deleting the ones you've just listened to. Sure, it takes a while, but you've got 5 episodes to get through, and in the next 5 hours it should be able to get it all down.
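The rules-plus-background-sync idea can be sketched in a few lines. This is a minimal illustration under my own assumptions, not how Apple or MobileMe actually work: `Item`, the `unplayed` rule helper, `desired_ids` and `sync_plan` are hypothetical names, and the sizes are made-up figures in MB. The phone holds whatever the rules pick from the full cloud library; after you've been out listening and watching, re-running the same rules gives the list of things to fetch in the background and the list of things to delete locally.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class Item:
    id: str
    series: str      # podcast or TV show name
    order: int       # hypothetical release order: higher means newer
    size_mb: int     # made-up size in MB
    played: bool = False


# A rule looks at the whole cloud library and returns the items it wants on the phone.
Rule = Callable[[list[Item]], list[Item]]


def unplayed(series: str, n: int, newest_first: bool) -> Rule:
    """Keep n unplayed items of a series, either the newest or the oldest ones."""
    def rule(library: list[Item]) -> list[Item]:
        items = [i for i in library if i.series == series and not i.played]
        items.sort(key=lambda i: i.order, reverse=newest_first)
        return items[:n]
    return rule


def desired_ids(library: list[Item], rules: list[Rule], capacity_mb: int) -> set[str]:
    """Apply rules in priority order, skipping anything that would overflow the phone."""
    chosen: set[str] = set()
    used = 0
    for rule in rules:
        for item in rule(library):
            if item.id not in chosen and used + item.size_mb <= capacity_mb:
                chosen.add(item.id)
                used += item.size_mb
    return chosen


def sync_plan(on_device: set[str], desired: set[str]) -> tuple[list[str], list[str]]:
    """Return (to_download, to_delete) so the phone converges on the desired set."""
    return sorted(desired - on_device), sorted(on_device - desired)


# Example: "last 2 unlistened podcasts" and "2 oldest unwatched episodes" on a 32GB phone.
library = [
    Item("pod-1", "Podcast", 1, 60), Item("pod-2", "Podcast", 2, 60),
    Item("pod-3", "Podcast", 3, 60), Item("ep-1", "Show", 1, 400),
    Item("ep-2", "Show", 2, 400), Item("ep-3", "Show", 3, 400),
]
rules = [unplayed("Podcast", 2, newest_first=True), unplayed("Show", 2, newest_first=False)]
on_device = desired_ids(library, rules, capacity_mb=32_000)  # synced at the base machine

# While out: you listen to pod-3, watch ep-1, and a new pod-4 is published.
library = [replace(i, played=True) if i.id in {"pod-3", "ep-1"} else i for i in library]
library.append(Item("pod-4", "Podcast", 4, 60))
download, delete = sync_plan(on_device, desired_ids(library, rules, capacity_mb=32_000))
# download == ["ep-3", "pod-4"], delete == ["ep-1", "pod-3"]
```

The nice property of expressing it as rules is that the phone never needs a fixed list of files: the same rules run at the base machine and over the air, and the difference between "what the rules want" and "what's on the phone" is the whole sync job.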

The point here is that MobileMe doesn't need huge amounts of storage. If it's an iTunes-purchased item (e.g. on an "unlistened to but purchased" list) then it just pulls it from there; if it's a podcast then Apple can fetch it in a fraction of a second, stream it to you and cache it in your MobileMe account. When you get back to your base station the same sort of sync occurs, and not only is all your music et al. backed up automatically to the cloud, but you now get the feeling of "infinite" storage. The easy option for Apple would be to limit it to iTunes purchases, or at least to do so for a basic MobileMe account; a Premium account could then back up and sync everything you have, of course through a properly authorised account, which answers the challenges of file sharing.

The UI side should be pretty simple for Apple as well. The Time Machine interface of multiple "levels" sounds like an ideal piece to replicate for a cloud browser: you look at an album where you've synced one track, then "go back" to the full album, then "go back" to all the albums you own by that artist. Throw in Genius and Apple can start flogging you new tracks by indicating other levels that are available via the iTunes Store.

What else could you do once you hit the cloud? Well, services like Animoto show what the cloud can do from a compute perspective, so why not have that synced directly from your phone via MobileMe? View the result, then post it directly to YouTube without requiring any additional local storage. You can decide to save it locally if you want, but you still have the video in your overall cloud view of content.

So after that, what do you need? Well, HD video is likely to become more common on compact cameras, and where cameras go the phones follow, so why not HD on an iPhone next year? Again this hits the storage side, but having a cloud solution would mean that you could quickly offload it to the cloud. If you want to do some local edits (iMovie for iPhone?) then you get a short low-res transcoded clip back, which lets you edit away on the device in a basic way. Upload your edits to the cloud "iMovie" and a fully rendered HD video is then available for viewing or sharing.
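The low-res editing idea leans on a familiar trick, usually called proxy editing: the edit is stored as a list of in and out points in seconds, which doesn't depend on resolution, so the list made against the small clips on the phone can be replayed against the full HD originals later. A minimal sketch, where `Cut`, `Source`, `render_plan` and the file names are hypothetical names of my own rather than anything from iMovie:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cut:
    clip: str        # hypothetical clip id shared by the proxy and the original
    start_s: float   # in-point, seconds from the start of the clip
    end_s: float     # out-point, seconds from the start of the clip


@dataclass(frozen=True)
class Source:
    path: str
    width: int       # resolution is irrelevant to the cut list, which is the point
    height: int
    duration_s: float


def render_plan(cuts: list[Cut], sources: dict[str, Source]) -> list[tuple[str, float, float]]:
    """Resolve an edit list onto whichever set of sources is given (proxies or originals)."""
    plan = []
    for cut in cuts:
        src = sources[cut.clip]
        if not 0 <= cut.start_s < cut.end_s <= src.duration_s:
            raise ValueError(f"{cut} falls outside {src.path}")
        plan.append((src.path, cut.start_s, cut.end_s))
    return plan


def total_length(cuts: list[Cut]) -> float:
    """Length of the finished video in seconds."""
    return sum(c.end_s - c.start_s for c in cuts)


proxies = {
    "beach": Source("beach_proxy.mp4", 480, 270, 42.0),
    "party": Source("party_proxy.mp4", 480, 270, 95.5),
}
originals = {
    "beach": Source("beach_hd.mov", 1920, 1080, 42.0),
    "party": Source("party_hd.mov", 1920, 1080, 95.5),
}

edit = [Cut("beach", 3.0, 10.5), Cut("party", 60.0, 72.0)]  # made on the phone
preview = render_plan(edit, proxies)    # what you scrub through on the device
final = render_plan(edit, originals)    # what the cloud (or your Mac) renders in HD
# final == [("beach_hd.mov", 3.0, 10.5), ("party_hd.mov", 60.0, 72.0)]
```

Only the tiny cut list has to travel back up from the phone; the heavy HD footage never needs to come down to it at all.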

Now of course what you need here is iMovie, and how do you get iMovie? Oh yes, you have to buy a Mac. So you buy the iMovie4iPhone application (à la Keynote) and the iMovie4MobileMe subscription to give you a nice joined-up experience. If you don't want the cloud rendering, then it's all included with the Mac; you just have to sync with your Mac to create and view the final HD video.

So suddenly there are a few pieces on your iPhone that just seem to demand that your next computer is a Mac. Not because the iPhone just works, but because it's really nice the way the two worlds synchronise via the cloud. Hell, throw in Time Machine via the cloud as a weekly offsite backup to go with your local daily one, and suddenly Apple are selling you a media cloud. Use Apple TV in your lounge to view all the stuff from this cloud and have it "just work". So you create a video on your iPhone, edited from low-res transcoded clips; this is then rendered via your main Mac as an HD version, which you can then watch on your Apple TV.

So while people are clamouring for lots more whizzbang features on the device, we thought that this missed the point. The iPhone isn't technically the most advanced device out there; it's just the best device for users. Asking for forward-facing cameras and other technical gizmos, so that the iPhone matches other devices on a tickbox, misses the reasons why it is the current leader in its space.

The opportunity for Apple here is to use the cloud, and in particular MobileMe, as the central coordination point for your whole media life. Apple should be able to commercialise that connection ($100 a year for this sort of functionality? I'd go for it) and can also use it to lever themselves into a stronger position in the home computer market.

Previously people have talked about the "central hub" in a home, whether it be Media Center or a PS3. The challenge with these is that they were always very physically centric solutions, and they didn't move with you as you left the building. A cloud solution gets rid of that problem and offers a whole heap of other opportunities.

Maybe Apple will go down the cram-in-the-features route, but personally I hope they open their eyes to the world they've already created and look to leverage it in new and interesting ways. You can keep your 10-megapixel camera; I want my media cloud.

Others can build clouds and hope that people will come; Apple can build a cloud that will have them queuing up to demand to be locked in.
